Product Description

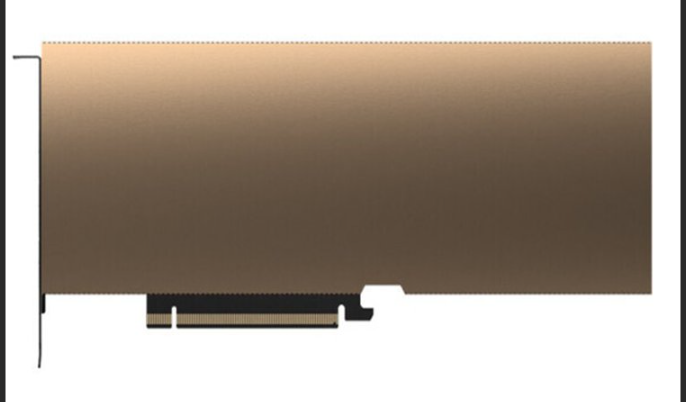

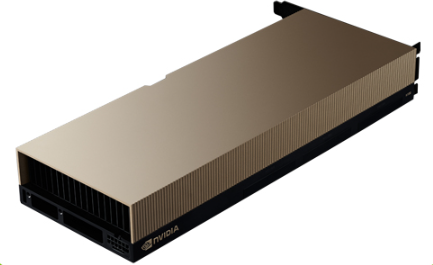

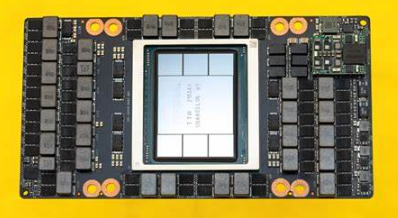

The H800 NVIDIA Ultra Micro NV Server is built for high-performance computing and deep learning workloads, providing strong computing power and efficient data processing.

Features

NVIDIA GPU Accelerator

Ultra micro server architecture

High performance computing power

Efficient data processing capabilities

The H800 NVIDIA Ultra Micro NV Server is designed specifically for high-performance computing and deep learning tasks. It adopts an ultra micro server architecture and is equipped with a powerful NVIDIA GPU accelerator, providing excellent computing performance and efficient data processing capabilities.

It is suitable for a range of scientific research, artificial intelligence, and data analysis tasks, and can quickly process large-scale data and complex computing workloads.

Access top-notch AI software for your NVIDIA H800 Tensor Core GPU on mainstream servers. The NVIDIA AI Enterprise software suite is the operating system of the NVIDIA artificial intelligence platform, and it is essential for production-ready applications built with NVIDIA’s extensive framework libraries, such as voice AI, recommenders, and customer service chatbots.

The NVIDIA H800 PCIe GPU includes NVIDIA AI Enterprise software and support.

Application Areas

The H800 NVIDIA Ultra Micro NV Server is widely used in scientific research, artificial intelligence, image processing, data analysis, and other fields. It provides powerful computing support, accelerates complex computing tasks, and meets users' high-performance computing needs.

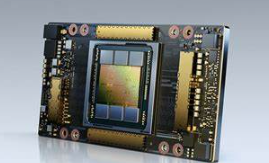

The United States has introduced new regulations restricting semiconductor exports to China, including restrictions on the export of high-performance computing chips to the Chinese mainland. The performance indicators of NVIDIA’s A100 chip are used as the limiting standard. A regulated high-performance computing chip is one that meets both of the following conditions:

(1) The I/O bandwidth (transfer rate) of the chip is greater than or equal to 600 GB/s;

(2) The sum, over the "original computing units of the digital processing unit", of the bit length of each operation multiplied by the computing power measured in TOPS is greater than or equal to 4800.

As a result, NVIDIA A100/H100 series and AMD MI200/MI300 series AI chips cannot be exported to China.
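The two conditions above amount to a simple threshold check, which can be sketched in Python as follows. This is only an illustration of the arithmetic as described on this page, not a statement of how the regulation is applied in practice. The function names, the `(bit_length, tops)` input format, and all the sample figures are hypothetical and do not describe any real chip.

```python
from typing import Iterable, Tuple

# Thresholds taken from the two conditions described in the text.
IO_BANDWIDTH_LIMIT_GB_S = 600.0
PERFORMANCE_LIMIT = 4800.0


def weighted_performance(modes: Iterable[Tuple[int, float]]) -> float:
    """Sum of (bit length x TOPS) over the given (bit_length, tops) pairs.

    Assumption: every listed pair contributes to the sum, following the
    wording on this page; the real rule may count units differently.
    """
    return sum(bits * tops for bits, tops in modes)


def is_regulated(io_bandwidth_gb_s: float,
                 modes: Iterable[Tuple[int, float]]) -> bool:
    """True only when BOTH conditions (1) and (2) are met."""
    meets_bandwidth = io_bandwidth_gb_s >= IO_BANDWIDTH_LIMIT_GB_S
    meets_performance = weighted_performance(modes) >= PERFORMANCE_LIMIT
    return meets_bandwidth and meets_performance


# Hypothetical chips, for illustration only.
chip_a = is_regulated(600.0, [(8, 600.0)])               # 8 * 600 = 4800
chip_b = is_regulated(400.0, [(8, 600.0)])               # bandwidth below 600
chip_c = is_regulated(900.0, [(16, 100.0), (8, 200.0)])  # 1600 + 1600 = 3200
print(chip_a, chip_b, chip_c)
```

Because both conditions must hold, a chip that stays under either threshold is not covered by this check: the hypothetical `chip_b` passes on compute but fails on bandwidth, and `chip_c` passes on bandwidth but fails on compute.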
