Product Description

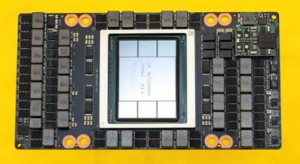

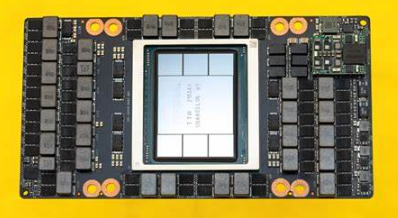

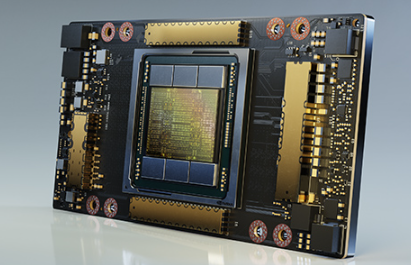

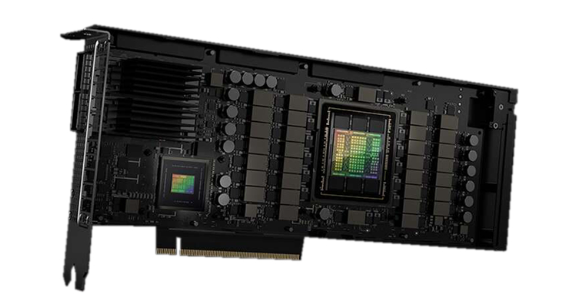

The H100 is NVIDIA’s latest generation of ultra micro servers, designed specifically for high-performance computing and artificial intelligence applications. It supports up to 14 of NVIDIA’s latest-generation GPUs and offers high scalability and availability.

Features

Powerful computing and graphics processing capabilities

Efficient energy utilization and heat dissipation design

Scalability and flexibility

The H100 NVIDIA Ultra Micro NV Server is designed specifically for high-performance computing and data processing. It uses advanced NVIDIA technology to deliver excellent computing and graphics processing capabilities, making it suitable for a wide range of scientific computing, artificial intelligence, and deep learning tasks.

The H100 NVIDIA Ultra Micro NV Server offers excellent performance and reliability. It is equipped with the latest NVIDIA graphics processors and an efficient CPU, and can handle complex computing tasks and large-scale data processing. Its advanced heat dissipation design and energy utilization technology keep it stable and efficient under high load.

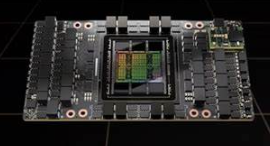

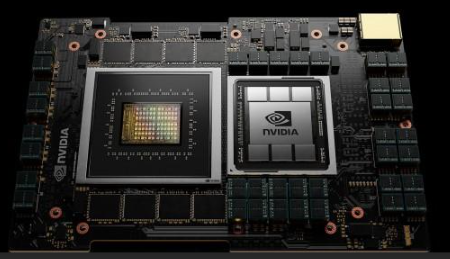

The NVIDIA H100 GPU uses a Transformer Engine with FP8 precision, providing up to four times faster training of large language models than the previous-generation GPU. The combination of fourth-generation NVIDIA NVLink, which provides 900 GB/s of GPU-to-GPU interconnect bandwidth; the NVLink Switch System, which accelerates collective communication for every GPU across nodes; PCIe 5.0; and Magnum IO® software delivers efficient scalability from small enterprises to large, unified GPU clusters. These infrastructure advances, combined with the NVIDIA AI Enterprise software suite, make HGX H100 a powerful end-to-end AI and HPC data center platform.

AI solves a wide range of business challenges using an equally wide range of neural networks. A great AI inference accelerator must deliver not only high performance but also the versatility to accelerate these networks wherever customers choose to deploy them, from the data center to the edge.

The HGX H100 further extends NVIDIA’s market-leading position in deep learning inference, with inference on the 530-billion-parameter Megatron chatbot running 30 times faster than on the previous generation.

The HGX H100 triples the floating-point operations per second (FLOPS) of the double-precision Tensor Cores, delivering up to 535 teraFLOPS for HPC in an 8-GPU configuration and 268 teraFLOPS in a 4-GPU configuration. HPC applications that incorporate AI can also use the H100’s TF32 precision to achieve nearly 8,000 teraFLOPS of throughput for single-precision matrix multiplication with zero code changes.
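As a quick sanity check on the double-precision figures quoted above, the system totals can be divided by the GPU count. The sketch below assumes throughput is split evenly across GPUs; the function and constant names are illustrative only.

```python
# System-level FP64 Tensor Core figures quoted in the text (teraFLOPS),
# keyed by the number of GPUs in the configuration.
SYSTEM_FP64_TFLOPS = {8: 535, 4: 268}


def per_gpu_tflops(gpu_count: int, system_tflops: float) -> float:
    """Split a system-level throughput figure evenly across its GPUs."""
    return system_tflops / gpu_count


if __name__ == "__main__":
    for gpus, total in SYSTEM_FP64_TFLOPS.items():
        share = per_gpu_tflops(gpus, total)
        print(f"{gpus}-GPU configuration: {share:.1f} teraFLOPS per GPU")
```

Both configurations work out to roughly 67 teraFLOPS per GPU, so the two quoted totals are consistent with each other.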

The H100 introduces DPX instructions, which speed up dynamic programming algorithms (such as Smith-Waterman for DNA sequence alignment, and protein alignment for protein structure prediction) by 7 times compared with GPUs based on the NVIDIA Ampere architecture. By increasing the throughput of diagnostic functions such as gene sequencing, the H100 can enable every clinic to offer accurate, real-time disease diagnosis and precision medicine prescriptions.
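To show the kind of workload meant here, the following is a minimal CPU-only sketch of the Smith-Waterman local alignment score in plain Python. It does not use DPX or any GPU; the scoring values (match, mismatch, gap) are assumptions chosen for illustration. The inner loop is the add-then-take-the-maximum recurrence typical of dynamic programming.

```python
def smith_waterman_score(a: str, b: str,
                         match: int = 2,
                         mismatch: int = -1,
                         gap: int = -2) -> int:
    """Return the best local alignment score of a and b (linear gap penalty)."""
    prev = [0] * (len(b) + 1)  # previous row of the scoring matrix
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            # Extend the diagonal with a match or mismatch score.
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Open or extend a gap in either sequence.
            up = prev[j] + gap
            left = curr[j - 1] + gap
            # Local alignment: scores never drop below zero.
            curr[j] = max(0, diag, up, left)
            best = max(best, curr[j])
        prev = curr
    return best


if __name__ == "__main__":
    print(smith_waterman_score("ACGT", "ACGT"))
    print(smith_waterman_score("ACGT", "ACT"))
```

Only two rows of the matrix are kept because the score alone is needed; recovering the aligned substrings would require storing the full matrix and tracing back from the best cell.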

The data center is the new unit of computing, and networking plays an integral role in scaling application performance across it. Paired with NVIDIA Quantum InfiniBand, HGX delivers world-class performance and efficiency, ensuring full utilization of computing resources. NVIDIA Quantum InfiniBand relies on in-network computing acceleration, remote direct memory access (RDMA), and advanced quality-of-service (QoS) capabilities.

For AI cloud data centers that deploy Ethernet, HGX is best paired with the NVIDIA Spectrum-X networking platform, which delivers high AI performance over 400 Gb/s Ethernet. Built on NVIDIA Spectrum™-4 switches and BlueField-3 DPUs, Spectrum-X provides consistent, predictable results for thousands of simultaneous AI jobs at every scale through optimized resource utilization and performance isolation. Spectrum-X supports advanced cloud multi-tenancy and zero-trust security. With Spectrum-X, cloud service providers can accelerate the development, deployment, and time to market of AI solutions while improving their return on investment.

Application Areas

The H100 NVIDIA Ultra Micro NV Server is widely used by scientific research institutions, large enterprises, educational institutions, and other organizations for tasks such as high-performance computing, artificial intelligence, deep learning, and big data analysis.

The United States has introduced new regulations restricting semiconductor exports to China, including restrictions on exporting high-performance computing chips to mainland China. The performance figures of NVIDIA’s A100 chip are used as the threshold. A regulated high-performance computing chip is one that meets both of the following conditions:

(1) The chip’s I/O bandwidth is greater than or equal to 600 GB/s;

(2) The sum, across the “raw computing units of the digital processing unit”, of each operation’s bit length multiplied by its computing power in TOPS is greater than or equal to 4800.

As a result, NVIDIA A100/H100 series and AMD MI200/MI300 series AI chips cannot be exported to China.
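The two conditions above can be expressed as a small check. This is a sketch of the rule as summarized on this page, not of the regulation's legal text; the class, function, and constant names are hypothetical, and the sample chip figures are made up for illustration.

```python
from dataclasses import dataclass

# Thresholds as stated in the two conditions above.
IO_BANDWIDTH_THRESHOLD_GB_S = 600
PERFORMANCE_THRESHOLD = 4800


@dataclass
class ComputeMode:
    """One operation type of a chip: operand bit length and throughput in TOPS."""
    bit_length: int
    tops: float


def weighted_performance(modes: list[ComputeMode]) -> float:
    """Sum of bit length multiplied by TOPS over all listed operation types."""
    return sum(mode.bit_length * mode.tops for mode in modes)


def is_regulated(io_bandwidth_gb_s: float, modes: list[ComputeMode]) -> bool:
    """A chip is regulated only if it meets BOTH conditions."""
    return (io_bandwidth_gb_s >= IO_BANDWIDTH_THRESHOLD_GB_S
            and weighted_performance(modes) >= PERFORMANCE_THRESHOLD)


if __name__ == "__main__":
    # Hypothetical chip: 16-bit operations at 300 TOPS, 600 GB/s of I/O bandwidth.
    sample_modes = [ComputeMode(bit_length=16, tops=300)]
    print(is_regulated(600, sample_modes))
```

Because both conditions must hold, a chip that exceeds the performance threshold but stays below 600 GB/s of I/O bandwidth would not be regulated under this summary of the rule.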
