Product Description

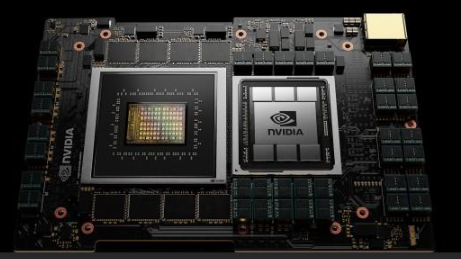

The GH200 NVIDIA Super Server is a cutting-edge server designed to meet the demanding requirements of high-performance computing. With its powerful NVIDIA GPU, it provides excellent performance and speed for artificial intelligence, deep learning, scientific research, and data analysis tasks. The server offers a range of configuration options to match different workload intensities and can easily scale to meet growing computing needs. Its advanced cooling system maintains optimal operating temperatures even under heavy workloads. GH200 has a robust and reliable design, making it the perfect choice for organizations seeking exceptional computing power.

Features

Powered by NVIDIA GPU for accelerated computing

High processing power and performance

Scalable and flexible configuration options

Advanced cooling system for efficient operation

Robust and reliable design for continuous heavy workloads

The GH200 NVIDIA Super Server is a cutting-edge solution for high-performance computing and data processing. With its powerful NVIDIA GPU, it provides excellent performance for demanding computing tasks and data-intensive applications. The server is designed to deliver high-speed processing for efficient data analysis and model training. With its scalable and flexible configuration options, it can be customized to meet the specific requirements of various industries and applications.

The all-new AI supercomputer connects 256 NVIDIA Grace Hopper™ Superchips so that they operate as a single GPU, opening up huge potential in the AI era. NVIDIA DGX™ GH200 is designed to handle terabyte-class models for large-scale recommendation systems, generative AI, and graph analytics, providing 144 TB of shared memory and linear scalability for massive AI models.

Scalable interconnection through NVLink, NVSwitch, and the NVLink Switch System

DGX GH200 uses the NVIDIA NVLink Switch System to extend NVIDIA® NVLink® between every Grace Hopper Superchip, scaling to 256 GPUs.

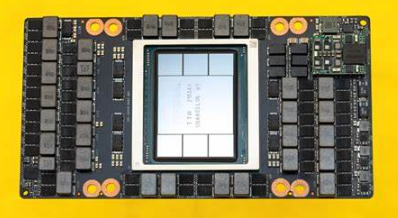

NVIDIA DGX GH200 connects 256 GH200 Superchips through NVLink interconnect technology and the NVLink Switch System into one large shared memory space, enabling them to operate as a single GPU. It provides 1 exaflop of performance and 144 TB of shared memory, nearly 500 times more than the previous-generation NVIDIA DGX A100 launched in 2020.
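As a rough sanity check on these figures, the sketch below divides the 144 TB shared memory pool across the 256 superchips and compares it with the GPU memory of the 2020 DGX A100. It assumes that "TB" here means binary terabytes (1 TB = 1024 GB) and that the baseline is the original DGX A100 configuration of 8 × 40 GB A100 GPUs (320 GB total); both are assumptions, not figures stated on this page.

```python
# Rough arithmetic check of the DGX GH200 memory figures quoted above.
# Assumptions (not from this page): 1 TB = 1024 GB, and the 2020 DGX A100
# baseline is 8 GPUs x 40 GB = 320 GB of total GPU memory.

GB_PER_TB = 1024            # assumption: binary terabytes
SUPERCHIPS = 256            # from the text
SHARED_MEMORY_TB = 144      # from the text

DGX_A100_GPUS = 8           # assumption: original 2020 DGX A100
DGX_A100_GB_PER_GPU = 40    # assumption: A100 40 GB variant


def memory_per_superchip_gb() -> float:
    """Share of the shared memory pool attributable to each superchip, in GB."""
    return SHARED_MEMORY_TB * GB_PER_TB / SUPERCHIPS


def ratio_vs_dgx_a100() -> float:
    """How many times larger the DGX GH200 pool is than the DGX A100 GPU memory."""
    a100_total_gb = DGX_A100_GPUS * DGX_A100_GB_PER_GPU
    return SHARED_MEMORY_TB * GB_PER_TB / a100_total_gb


if __name__ == "__main__":
    print(f"Per superchip: {memory_per_superchip_gb():.0f} GB")  # 576 GB
    print(f"vs. DGX A100:  {ratio_vs_dgx_a100():.1f}x")          # 460.8x
```

Under these assumptions each superchip contributes 576 GB, and the pool is about 461 times the DGX A100's 320 GB, consistent with the "nearly 500 times" claim. With decimal terabytes the per-superchip figure would be 562.5 GB and the ratio 450.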

The GH200 Superchip combines an Arm-based NVIDIA Grace™ CPU with an NVIDIA H100 Tensor Core GPU, linked by NVIDIA NVLink-C2C chip-to-chip interconnect, eliminating the need for a traditional CPU-to-GPU PCIe connection. Compared with the latest PCIe technology, this increases the bandwidth between GPU and CPU by 7 times, reduces interconnect power consumption by more than 5 times, and provides a 600 GB Hopper architecture GPU building block for the DGX GH200 supercomputer.
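The "7 times" figure can be reproduced with back-of-the-envelope arithmetic. This sketch assumes that NVLink-C2C provides 900 GB/s of total (bidirectional) CPU-GPU bandwidth and that "the latest PCIe technology" means a PCIe 5.0 x16 link (32 GT/s per lane, 128b/130b encoding); neither number appears on this page, so treat them as assumptions.

```python
# Back-of-the-envelope check of the "7 times the bandwidth of PCIe" claim.
# Assumptions (not from this page): NVLink-C2C delivers 900 GB/s of total
# (bidirectional) CPU-GPU bandwidth, and the PCIe baseline is a Gen5 x16 link.

NVLINK_C2C_GBPS = 900.0       # assumption: NVLink-C2C total bandwidth, GB/s

PCIE5_GT_PER_LANE = 32.0      # PCIe 5.0 signalling rate, GT/s per lane
PCIE5_LANES = 16              # x16 link
PCIE_ENCODING = 128 / 130     # 128b/130b line-encoding efficiency


def pcie5_x16_bidirectional_gbps() -> float:
    """Usable PCIe 5.0 x16 bandwidth in GB/s, summed over both directions."""
    per_direction_gbit = PCIE5_GT_PER_LANE * PCIE5_LANES * PCIE_ENCODING
    return 2 * per_direction_gbit / 8  # two directions, bits -> bytes


def c2c_speedup() -> float:
    """Ratio of NVLink-C2C bandwidth to PCIe 5.0 x16 bandwidth."""
    return NVLINK_C2C_GBPS / pcie5_x16_bidirectional_gbps()


if __name__ == "__main__":
    print(f"PCIe 5.0 x16: {pcie5_x16_bidirectional_gbps():.1f} GB/s")  # ~126.0
    print(f"NVLink-C2C speedup: {c2c_speedup():.1f}x")                 # ~7.1
```

Comparing both links on a bidirectional basis gives roughly 126 GB/s for PCIe 5.0 x16 and a ratio of about 7.1, in line with the quoted 7x.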

The DGX GH200 is the first supercomputer to pair Grace Hopper Superchips with the NVIDIA NVLink Switch System. This new interconnect enables all GPUs in a DGX GH200 system to work together as one. The previous-generation system could combine only 8 GPUs into one GPU through NVLink without compromising performance.

The DGX GH200 architecture provides more than 48 times the NVLink bandwidth of the previous generation, delivering the power of a massive AI supercomputer with the simplicity of programming a single GPU.

Providing Large Memory for Giant Models

The NVIDIA DGX GH200 is the only AI supercomputer that provides 144 TB of shared memory across 256 NVIDIA Grace Hopper Superchips, giving developers nearly 500 times more memory to build giant models.

Computing with ultra-high energy efficiency

The Grace Hopper Superchip combines the NVIDIA Grace™ CPU and the NVIDIA Hopper™ GPU in the same package, eliminating the need for a traditional PCIe CPU-to-GPU connection, increasing bandwidth by 7 times and reducing interconnect power consumption by more than 5 times.

Integrated and ready to run

With a turnkey DGX GH200 deployment, large-scale models can be built in weeks instead of months. This full-stack, data-center-class solution includes software and white-glove services from design to deployment, accelerating the return on AI investment.

Application areas

The GH200 NVIDIA Super Server is widely used in fields such as artificial intelligence, deep learning, scientific research, data analysis, and machine learning.

The United States has introduced new regulations restricting semiconductor exports to China, including restrictions on exporting high-performance computing chips to the Chinese Mainland. The performance indicators of NVIDIA's A100 chip are used as the limiting standard. A regulated high-performance computing chip is one that meets both of the following conditions:

(1) The chip's I/O bandwidth (transfer rate) is 600 GB/s or greater;

(2) The aggregate bit length of each operation multiplied by the processing performance, measured in TOPS, of the digital processing unit's primitive computational units is 4800 or greater. As a result, NVIDIA A100/H100-series and AMD MI200/MI300-series AI chips cannot be exported to China.
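The two conditions above can be expressed as a simple check. This is a simplified sketch of the rule as described on this page, not the legal text: it treats condition (2) as bit length × TOPS at a single precision, and it assumes A100 figures of 600 GB/s NVLink bandwidth and 312 TFLOPS of dense FP16 Tensor Core throughput. The `Chip` type, the `is_restricted` function, and the 400 GB/s part are hypothetical.

```python
from dataclasses import dataclass

# Simplified reading of the two conditions: a chip is restricted only if
# BOTH thresholds are met. The real rule has more detail (e.g. how TOPS is
# counted across precisions); this sketch uses one precision per chip.

IO_THRESHOLD_GBPS = 600.0   # condition (1): I/O bandwidth, GB/s
PERF_THRESHOLD = 4800.0     # condition (2): bit length x TOPS


@dataclass
class Chip:  # hypothetical type for illustration
    name: str
    io_bandwidth_gbps: float  # chip I/O bandwidth, GB/s
    tops: float               # peak throughput at the chosen precision, TOPS
    bit_length: int           # operand bit length for that precision


def performance_metric(chip: Chip) -> float:
    """Bit length of the operation multiplied by TOPS (condition 2)."""
    return chip.bit_length * chip.tops


def is_restricted(chip: Chip) -> bool:
    """True only when both condition (1) and condition (2) are met."""
    return (chip.io_bandwidth_gbps >= IO_THRESHOLD_GBPS
            and performance_metric(chip) >= PERF_THRESHOLD)


if __name__ == "__main__":
    chips = [
        # Assumed A100 figures: 600 GB/s NVLink, 312 dense FP16 Tensor TFLOPS.
        Chip("A100", 600.0, 312.0, 16),
        # Hypothetical part: same compute, I/O cut to 400 GB/s.
        Chip("hypothetical-400", 400.0, 312.0, 16),
    ]
    for c in chips:
        print(c.name, performance_metric(c), is_restricted(c))
    # A100 4992.0 True
    # hypothetical-400 4992.0 False
```

Under these assumptions the A100 scores 312 × 16 = 4992, just above the 4800 threshold, and its 600 GB/s bandwidth sits exactly at the limit, which is why it serves as the reference point. Because both conditions must hold, dropping either figure below its threshold is enough to fall outside this test.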
