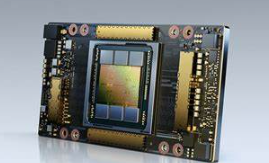

Product Description

The A100 NVIDIA Supermicro NV server is a powerful server designed for high-performance computing and data-intensive applications. It is equipped with the NVIDIA A100 GPU, which provides excellent performance and efficiency for artificial intelligence, machine learning, and scientific computing workloads.

Features

NVIDIA A100 GPU (Ampere architecture) for accelerated computing

High memory bandwidth and capacity for large-scale data processing

Advanced cooling systems for optimal performance and reliability

Flexible configuration options to meet different workload requirements

The NVIDIA A100 Tensor Core GPU delivers excellent acceleration at every scale for AI, data analytics, and HPC workloads, powering high-performance elastic data centers. Built on the NVIDIA Ampere architecture, the A100 is the engine of the NVIDIA data center platform. It delivers up to 20 times the performance of the previous generation and can be partitioned into seven GPU instances that adjust dynamically to changing demands. The A100 is available with either 40GB or 80GB of GPU memory. The A100 80GB doubles the GPU memory and provides ultra-fast memory bandwidth of over 2 terabytes per second (TB/s), enough to handle very large models and datasets.

The A100's Tensor Cores use TensorFloat-32 (TF32) precision to deliver up to 20 times the performance of NVIDIA Volta with no code changes, and using automatic mixed precision with FP16 can double performance again. Combined with NVIDIA® NVLink®, NVIDIA NVSwitch™, PCIe 4.0, NVIDIA® InfiniBand®, and the NVIDIA Magnum IO™ SDK, the A100 can scale to thousands of GPUs.
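TF32 keeps FP32's 8-bit exponent (so its numeric range is unchanged) but only 10 explicit mantissa bits, the same as FP16. The Python sketch below emulates what rounding an FP32 value to TF32 precision does to it. It is an illustration only: `to_tf32` is a hypothetical helper, it assumes round-to-nearest-even (the hardware's exact rounding behavior may differ), and it is meant for finite inputs.

```python
import struct


def to_tf32(x: float) -> float:
    """Round a finite value to TF32 precision (hypothetical helper).

    TF32 keeps FP32's sign bit and 8-bit exponent but only the top 10 of
    FP32's 23 mantissa bits, so the low 13 bits are rounded away here
    using round-to-nearest-even (an assumed rounding mode).
    """
    # Reinterpret the value as the raw bits of an IEEE 754 float32.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Lowest mantissa bit that TF32 keeps; used to break ties toward even.
    keep_lsb = (bits >> 13) & 1
    # Add just under half of the dropped range (plus the tie-breaker),
    # then clear the 13 dropped mantissa bits.
    bits = (bits + 0x0FFF + keep_lsb) & 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


print(to_tf32(3.14159265))  # 3.140625
print(to_tf32(1 + 2**-11))  # 1.0 (an exact tie, rounded to the even neighbour)
```

The exponent is untouched, which is why TF32 can stand in for FP32 in matrix math without overflow surprises; only the precision of each value drops.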

At scale, 2,048 A100 GPUs can complete a training workload such as BERT in one minute, an exceptionally fast time to solution.

For very large models with massive data tables, such as the Deep Learning Recommendation Model (DLRM), the A100 80GB provides up to 1.3TB of unified memory per node and delivers up to three times the throughput of the A100 40GB.

The NVIDIA A100 introduces double-precision Tensor Cores, delivering the biggest leap in HPC performance since the introduction of GPUs. Combined with 80GB of ultra-fast GPU memory, this lets researchers reduce a 10-hour double-precision simulation to under four hours on the A100. HPC applications can also use TF32 to achieve up to 10 times higher throughput for single-precision dense matrix multiplication.
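Expressed as a ratio, the simulation figure corresponds to a speedup of more than 2.5x. Here T_before (the original 10-hour runtime) and T_A100 (the runtime on the A100, under four hours) are symbols introduced only for this illustration.

```latex
\text{speedup} = \frac{T_{\text{before}}}{T_{\text{A100}}} > \frac{10\ \text{h}}{4\ \text{h}} = 2.5
```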

For HPC applications with large datasets, the additional memory of the A100 80GB can deliver up to twice the throughput when running the materials simulation code Quantum Espresso. This large memory capacity and ultra-fast memory bandwidth make the A100 80GB well suited as a platform for next-generation workloads.

For large-scale data analytics, accelerated servers equipped with A100 GPUs provide the necessary compute power, along with large memory capacity, more than 2 TB/s of memory bandwidth, and scalability through NVIDIA® NVLink® and NVSwitch™. Combined with InfiniBand, NVIDIA Magnum IO™, and the RAPIDS™ suite of open-source libraries (including the RAPIDS Accelerator for Apache Spark for GPU-accelerated data analytics), the NVIDIA data center platform can accelerate these large workloads with exceptional performance and efficiency.

In a big data analytics benchmark, the A100 80GB delivered insights with twice the throughput of the A100 40GB, making it well suited to emerging workloads whose dataset sizes are growing rapidly.

Combined with Multi-Instance GPU (MIG) technology, the A100 can greatly improve the utilization of GPU-accelerated infrastructure. MIG allows an A100 GPU to be partitioned into as many as seven independent instances, giving multiple users access to GPU acceleration at the same time. On the A100 40GB, each MIG instance can be allocated up to 5GB of memory; with the doubled memory capacity of the A100 80GB, this size doubles to 10GB.
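The 5GB and 10GB figures follow from how MIG divides the GPU. As a rough sketch, assuming the slice model described in NVIDIA's MIG documentation (seven compute slices and eight memory slices per A100), the smallest instance receives one memory slice, so seven such instances use seven of the eight slices. The constants and helper names are illustrative, and the sizes are nominal; the usable memory reported by the driver is slightly lower.

```python
# Simplified model of MIG partitioning on an A100 (assumption based on
# NVIDIA's MIG documentation): 7 compute slices and 8 memory slices.
COMPUTE_SLICES = 7
MEMORY_SLICES = 8


def smallest_instance_memory_gb(total_memory_gb: int) -> float:
    """Nominal memory of the smallest (one-slice) MIG instance."""
    return total_memory_gb / MEMORY_SLICES


def smallest_profile_name(total_memory_gb: int) -> str:
    """Name of the one-slice profile, e.g. '1g.5gb' on an A100 40GB."""
    return f"1g.{smallest_instance_memory_gb(total_memory_gb):g}gb"


for total_gb in (40, 80):
    per_instance = smallest_instance_memory_gb(total_gb)
    print(
        f"A100 {total_gb}GB: up to {COMPUTE_SLICES} x {smallest_profile_name(total_gb)} "
        f"instances, {COMPUTE_SLICES * per_instance:g} GB allocated in total"
    )
```

Because memory is divided into eight slices but compute into seven, seven one-slice instances on an A100 40GB use 35GB rather than the full 40GB.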

MIG works with Kubernetes, containers, and hypervisor-based server virtualization. It lets infrastructure managers provide a right-sized GPU with guaranteed quality of service (QoS) for every job, extending the reach of accelerated computing resources to every user.

Application Areas

The A100 NVIDIA Supermicro NV server is well suited to a wide range of applications, including deep learning and AI model training, high-performance computing (HPC), scientific simulation and research, data analysis and visualization, virtualization, and cloud computing.

The United States has introduced new export controls on semiconductors bound for China, including restrictions on exporting high-performance computing chips to mainland China, and has used the performance of NVIDIA's A100 chip as the benchmark for these limits. A chip is regulated if it meets both of the following conditions:

(1) The chip's I/O bandwidth (transfer rate) is greater than or equal to 600 GB/s;

(2) The chip's total processing performance, calculated as its computing power in TOPS multiplied by the bit length of the operation and summed across the chip's processing units, is greater than or equal to 4,800.

This effectively prevents the NVIDIA A100/H100 series and AMD MI200/MI300 series AI chips from being exported to China.
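The two conditions can be expressed as a simple check. This Python sketch is a simplification, not the legal definition: the function names are hypothetical, it treats one operation type at a time, and the A100 inputs (600 GB/s of NVLink bandwidth and 312 dense FP16 Tensor Core TFLOPS, counted as TOPS) are assumed from NVIDIA's published specifications.

```python
# Thresholds from the two conditions for a regulated chip.
IO_BANDWIDTH_LIMIT_GBPS = 600  # condition (1): I/O bandwidth in GB/s
PERFORMANCE_LIMIT = 4800       # condition (2): TOPS x bit length


def total_processing_performance(tops: float, bit_length: int) -> float:
    """TOPS (summed over all processing units) times the operation's bit length."""
    return tops * bit_length


def is_regulated(io_bandwidth_gbps: float, tops: float, bit_length: int) -> bool:
    """A chip is regulated only if it meets BOTH conditions."""
    return (
        io_bandwidth_gbps >= IO_BANDWIDTH_LIMIT_GBPS
        and total_processing_performance(tops, bit_length) >= PERFORMANCE_LIMIT
    )


# Assumed A100 figures: 600 GB/s NVLink, 312 dense FP16 Tensor TFLOPS (16-bit).
print(total_processing_performance(312, 16))  # 4992
print(is_regulated(600, 312, 16))             # True
# A hypothetical chip with the same compute but only 400 GB/s of I/O bandwidth.
print(is_regulated(400, 312, 16))             # False
```

Because the conditions are joined by "and", a chip that falls below either threshold is not regulated under this rule, which the third call illustrates.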
