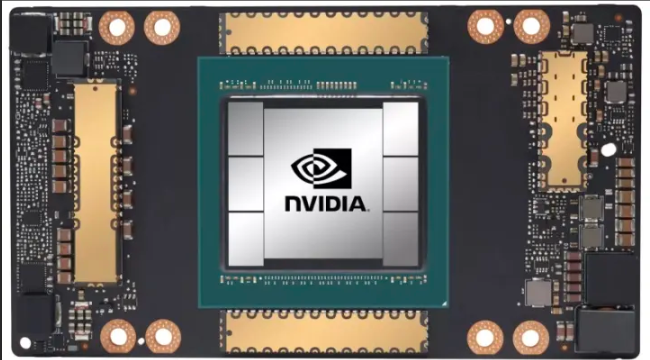

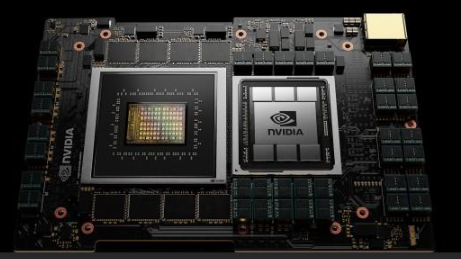

Product Description

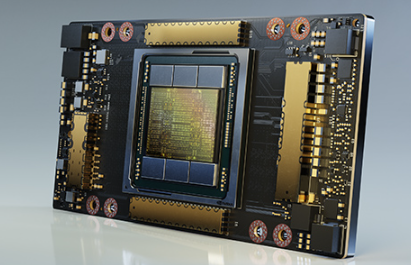

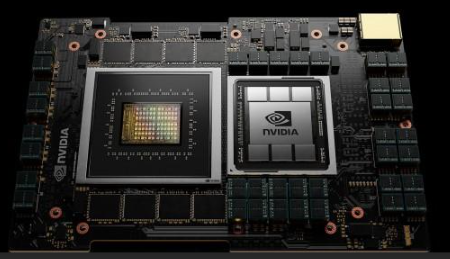

The NVIDIA H100 PCIe single card is a cutting-edge graphics processing unit designed for compute-intensive workloads. With its powerful NVIDIA GPU, large memory capacity, and advanced cooling system, it delivers high performance and reliability across a wide range of applications.

Features

Powerful NVIDIA GPU for accelerated computing

High memory capacity and bandwidth for efficient data processing

Advanced cooling system for optimal performance and reliability

The NVIDIA H100 PCIe single card is a powerful graphics processing unit designed for demanding applications that require high-performance computing and graphics capabilities. It is equipped with a high-performance NVIDIA GPU and a PCIe interface, allowing it to connect seamlessly to compatible systems. The single-card design makes installation straightforward and uses space efficiently. In addition, an advanced cooling system helps maintain optimal operating temperatures for reliable performance.

AI uses a wide range of neural networks to address a wide range of business challenges. An excellent AI inference accelerator must not only deliver exceptional performance but also offer the versatility to accelerate all of these networks.

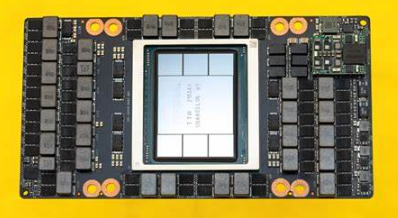

The H100 further extends NVIDIA's market-leading position in inference with several advanced technologies that can increase inference speed by up to 30 times while providing ultra-low latency. The fourth-generation Tensor Cores accelerate all precisions, including FP64, TF32, FP32, FP16, and INT8. The Transformer Engine combines FP8 and FP16 precision to reduce memory usage and improve performance while still maintaining the accuracy of large language models.

The NVIDIA data center platform continues to deliver performance gains that outpace Moore's Law. H100's breakthrough AI performance further strengthens the combination of HPC and AI, helping scientists and researchers accelerate their discoveries so they can focus on solving the world's most important challenges.

H100 triples the floating-point operations per second (FLOPS) of its double-precision Tensor Cores, delivering 60 teraFLOPS of FP64 computing for HPC. HPC applications that integrate AI can also use H100's TF32 precision to reach 1 petaFLOP of throughput for single-precision matrix multiplication, without any code changes.

H100 also introduces DPX instructions, which accelerate dynamic programming algorithms (such as Smith-Waterman for DNA sequence alignment) by 7 times compared with the NVIDIA A100 Tensor Core GPU, and by 40 times compared with traditional servers that use only dual CPUs.
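To show what kind of dynamic programming workload this refers to, here is a minimal CPU-only Python sketch of the Smith-Waterman local alignment score. It does not use DPX or a GPU; it only illustrates that the inner loop is a chain of additions followed by a maximum, repeated for every cell of the score matrix. The scoring parameters (`match=2`, `mismatch=-1`, `gap=-1`, linear gap penalty) are assumptions chosen for illustration.

```python
def smith_waterman_score(a: str, b: str, match: int = 2,
                         mismatch: int = -1, gap: int = -1) -> int:
    """Return the best local alignment score of a and b (linear gap penalty).

    Scoring parameters are illustrative assumptions, not a standard.
    """
    cols = len(b) + 1
    prev = [0] * cols  # previous row of the score matrix H
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * cols
        for j in range(1, cols):
            # Each cell is a few additions followed by a max, clamped at 0
            # so that a new local alignment can start anywhere.
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = prev[j] + gap
            left = curr[j - 1] + gap
            curr[j] = max(0, diag, up, left)
            best = max(best, curr[j])
        prev = curr
    return best


if __name__ == "__main__":
    # "ACGT" appears whole inside "TACGTT": 4 matches x 2 points each.
    print(smith_waterman_score("ACGT", "TACGTT"))  # 8
```

The work grows with the product of the two sequence lengths, which is why hardware support for this add-and-max pattern matters for large genomic workloads.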

In AI application development, data analytics often consumes most of the time. Because large datasets are scattered across multiple servers, scale-out solutions built only from commodity CPU servers lack scalable computing performance and quickly become bottlenecked.

Accelerated servers equipped with H100 deliver the necessary computing power, along with 3 TB/s of memory bandwidth per GPU and scalability through NVLink and NVSwitch, to handle data analytics with high performance and to scale out to support large datasets. Combined with NVIDIA Quantum-2 InfiniBand, Magnum IO software, GPU-accelerated Spark 3.0, and NVIDIA RAPIDS™, the NVIDIA data center platform can accelerate these large workloads with excellent performance and efficiency.

Application Areas

The NVIDIA H100 PCIe single card is widely used in fields such as artificial intelligence research and development, scientific research and simulation, data analytics, and machine learning.

The United States has introduced new regulations restricting semiconductor exports to China, including restrictions on exporting high-performance computing chips to mainland China, with the performance of NVIDIA's A100 chip used as the benchmark for the limits. A chip is regulated as a high-performance computing chip if it meets both of the following conditions:

(1) The chip's I/O bandwidth (transfer rate) is 600 GB/s or greater;

(2) The computing power in TOPS multiplied by the bit length of each operation, summed over all of the chip's processing units, is 4800 or greater. As a result, NVIDIA's A100/H100 series and AMD's MI200/300 series AI chips cannot be exported to China.
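As a rough illustration of how the two conditions combine, the Python sketch below encodes them as a simple check. It is a simplified reading of the description above, not the regulation itself: the `Chip` type, the per-unit `(tops, bit_length)` model, and the example figures are hypothetical, and the actual rules define these quantities in more detail.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Thresholds as described in the text above (simplified reading).
MIN_IO_BANDWIDTH_GBPS = 600.0   # condition (1), in GB/s
MIN_TOPS_TIMES_BITS = 4800.0    # condition (2), TOPS x bit length


@dataclass
class Chip:
    """Hypothetical chip description used only for this illustration."""
    name: str
    io_bandwidth_gbps: float
    # One (TOPS, bit length) pair per processing unit or operation type.
    units: List[Tuple[float, int]]


def performance_score(chip: Chip) -> float:
    """Sum of TOPS multiplied by bit length over the chip's processing units."""
    return sum(tops * bits for tops, bits in chip.units)


def is_restricted(chip: Chip) -> bool:
    """A chip is restricted only if BOTH conditions are met."""
    return (chip.io_bandwidth_gbps >= MIN_IO_BANDWIDTH_GBPS
            and performance_score(chip) >= MIN_TOPS_TIMES_BITS)


if __name__ == "__main__":
    # Hypothetical figures, not the specification of any real product.
    example = Chip("hypothetical-accelerator", io_bandwidth_gbps=700.0,
                   units=[(400.0, 16)])
    print(performance_score(example), is_restricted(example))  # 6400.0 True
```

Because the conditions are joined with "and", a chip whose compute score exceeds 4800 but whose I/O bandwidth stays below 600 GB/s would fall outside this simplified check.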
